Revenge of the Builders

17 Jan 2025I wanted to make a quick addendum to my last post in that I did some further experimenting with Data.ByteString.Builder on both the base16 encoding and decoding tasks, comparing results with the impure direct-allocation-and-write-based implementations I mentioned previously.

I had remarked that builders can be pretty efficient if you’re careful to pack your data aggressively, such that you don’t wind up needing to use too many builders in the first place. I decided to try minimising, as in objectively minimising, the number of builders required in both base16 encoding & decoding, to see what kind of performance I could squeeze out while sticking to the pure API. The builders didn’t disappoint.

How would one “objectively minimise” the number of builders required here? Simply by processing the biggest-sized chunk possible at a time, given we always want to write a Word64. If we can’t do that, we’ll write a Word32 and a Word16 and a Word8. If we can’t do that, we’ll write a Word32 and a Word16. And so on. We can figure all this out just by doing some checks on the length of the input bytestring when starting out: we trade some additional cheap arithmetic operations on machine integers up-front for fewer expensive builder allocations further down the line.

For encoding, this means doing the following checks:

encode :: BS.ByteString -> BS.ByteString

encode bs@(BI.PS _ _ l)

| l < 64 = to_strict_small loop

| otherwise = to_strict loop

where

loop

| l `rem` 4 == 0 = go64 bs

| (l - 3) `rem` 4 == 0 = case BS.splitAt (l - 3) bs of

(chunk, etc) ->

go64 chunk

<> go32 (BU.unsafeTake 2 etc)

<> go16 (BU.unsafeDrop 2 etc)

| (l - 2) `rem` 4 == 0 = case BS.splitAt (l - 2) bs of

(chunk, etc) -> go64 chunk <> go32 etc

| (l - 1) `rem` 4 == 0 = case BS.splitAt (l - 1) bs of

(chunk, etc) -> go64 chunk <> go16 etc

| l `rem` 2 == 0 = go32 bs

| (l - 1) `rem` 2 == 0 = case BS.splitAt (l - 1) bs of

(chunk, etc) -> go32 chunk <> go16 etc

| otherwise = go16 bs

where each ‘go’ function writes words with the indicated number of bits at a time, e.g.:

go64 b = case BS.splitAt 4 b of

(chunk, etc)

| BS.null chunk -> mempty

| otherwise ->

let !w16_0 = expand_w8 (BU.unsafeIndex chunk 0)

!w16_1 = expand_w8 (BU.unsafeIndex chunk 1)

!w16_2 = expand_w8 (BU.unsafeIndex chunk 2)

!w16_3 = expand_w8 (BU.unsafeIndex chunk 3)

!w64 = fi w16_0 `B.shiftL` 48

.|. fi w16_1 `B.shiftL` 32

.|. fi w16_2 `B.shiftL` 16

.|. fi w16_3

in BSB.word64BE w64 <> go64 etc

and where expand_w8 is just a variant of the previous ‘hilo’ function

that returns a Word16 directly, rather than a pair of Word8’s.

‘go32’ works on a chunk of size two, writing a single Word32, and ‘go16’ on a chunk of size one, writing a Word16. The point of having all these functions is that we can now always write the largest-sized word that we can, instead of writing Word8’s or Word16’s exclusively.

(Decoding works similarly, except we need more checks, and an additional ‘go8’ function to handle the one-byte case. I won’t paste the salad of conditionals required here.)

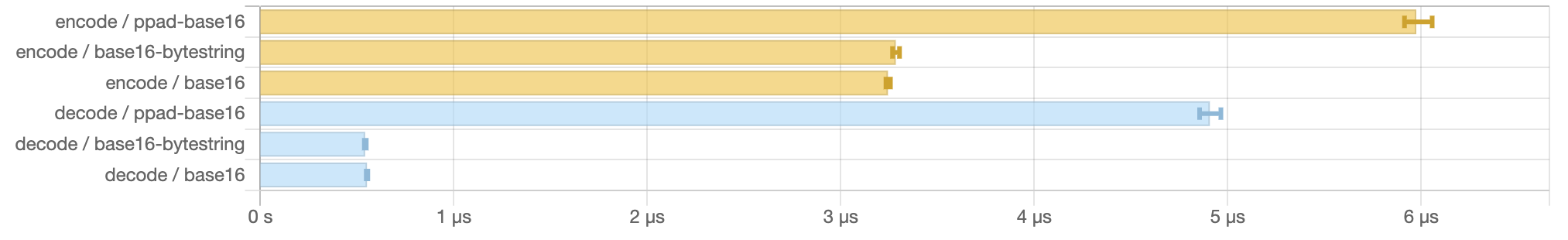

In any case, by employing this strategy we can come pretty close to the performance of the impure implementations. Here are some benchmark results for encoding a 1kb input:

benchmarking encode/ppad-base16

time 5.929 μs (5.847 μs .. 6.013 μs)

0.999 R² (0.998 R² .. 0.999 R²)

mean 5.975 μs (5.913 μs .. 6.057 μs)

std dev 233.1 ns (172.4 ns .. 310.0 ns)

benchmarking encode/base16-bytestring

time 3.246 μs (3.233 μs .. 3.262 μs)

1.000 R² (1.000 R² .. 1.000 R²)

mean 3.284 μs (3.268 μs .. 3.303 μs)

std dev 61.05 ns (47.83 ns .. 76.77 ns)

benchmarking encode/base16

time 3.236 μs (3.221 μs .. 3.253 μs)

1.000 R² (1.000 R² .. 1.000 R²)

mean 3.244 μs (3.233 μs .. 3.256 μs)

std dev 37.31 ns (31.87 ns .. 47.21 ns)

Case Allocated GCs

ppad-base16 (encode) 53,704 0

base16-bytestring (encode) 2,272 0

base16 (encode) 2,256 0

We’re allocating 25x more than the impure versions, but are only 2x slower or less. Here’s the decoding story:

benchmarking decode/ppad-base16

time 4.942 μs (4.884 μs .. 4.995 μs)

0.999 R² (0.998 R² .. 0.999 R²)

mean 4.908 μs (4.854 μs .. 4.964 μs)

std dev 176.8 ns (150.3 ns .. 214.3 ns)

variance introduced by outliers: 46% (moderately inflated)

benchmarking decode/base16-bytestring

time 540.8 ns (533.7 ns .. 548.2 ns)

0.999 R² (0.999 R² .. 0.999 R²)

mean 541.6 ns (536.9 ns .. 547.5 ns)

std dev 17.64 ns (13.87 ns .. 22.24 ns)

variance introduced by outliers: 47% (moderately inflated)

benchmarking decode/base16

time 555.8 ns (549.7 ns .. 560.9 ns)

0.999 R² (0.999 R² .. 1.000 R²)

mean 550.7 ns (546.7 ns .. 555.5 ns)

std dev 15.46 ns (13.11 ns .. 18.97 ns)

variance introduced by outliers: 39% (moderately inflated)

Case Allocated GCs

ppad-base16 (decode) 21,960 0

base16-bytestring (decode) 128 0

base16 (decode) 2,440 0

We’re allocating less (we’re writing less), but are closer to 10x slower. Not too bad, all things considered!

(N.b., it’s worth noting that the impure decoding functions also use what appears to be a more efficient lookup table to convert from hex characters back to Word8, so that may account for some of the differential there.)